Blog Posts

Applied Behavior Analysis (ABA) is a widely recognized approach used to teach new skills and modify behaviors, particularly for individuals with developmental disabilities, including autism. One of...

In the world of creative thinking and problem solving, prompts serve as invaluable tools for guiding thought processes and stimulating innovative ideas. But not all prompts are created equal. Some are...

The rise of artificial intelligence (AI) has given birth to a multitude of new career opportunities, one of which is the role of an AI prompt engineer. If you’ve been hearing the term “AI prompt...

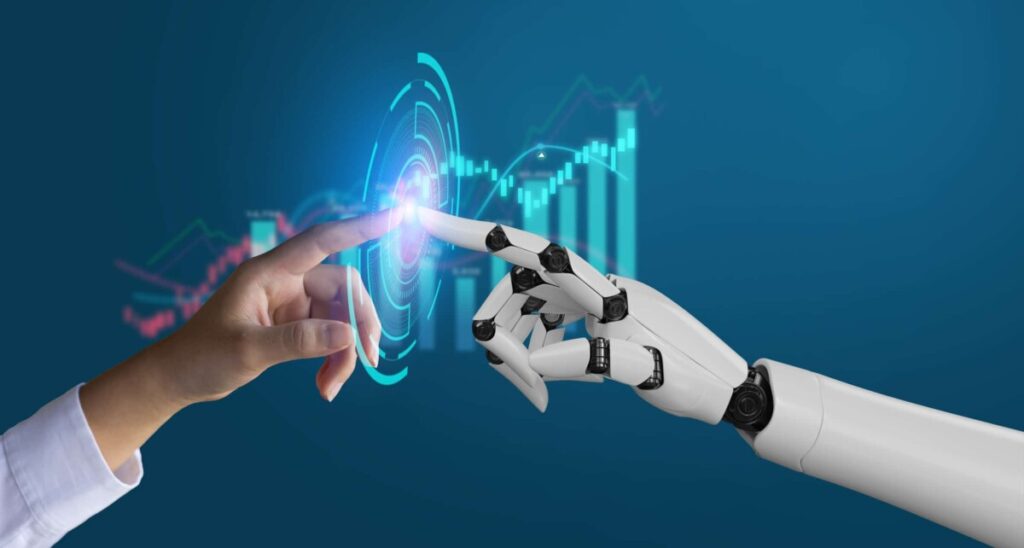

In today’s fast-paced digital world, efficiency is key. Whether in business, technology, or day-to-day life, the need to optimize processes and outcomes has never been greater. Enter AI...

In the fast-paced digital world, creating compelling content that stands out is essential. But what if you could add a touch of sweetness to your content creation process? Enter “frosting AI...

Creativity is evolving at an unprecedented pace in the digital age. Artists are exploring new mediums and tools to express their visions, and one of the most interesting trends...

Large Language Models (LLMs) have become an integral part of various applications, from chatbots to content generation. However, ensuring their effectiveness and reliability requires rigorous...

In the rapidly evolving business landscape, where efficiency and precision are paramount, Artificial Intelligence (AI) has emerged as a transformative force. Among the various aspects of AI, Prompt...

Language models are everywhere, whether we’re using a search engine, getting suggestions from our phone’s keyboard, or talking to digital assistants. But how do we know if...

With the rapid development of digital content creation, the advent of AI-powered tools has changed how content is created, refined, and optimized. One of the most potent tools at a content...

As the demand for AI-generated content continues to grow, the importance of effective prompt creation cannot be overstated. Crafting well-designed prompts is crucial for eliciting high-quality...

In the rapidly evolving landscape of digital content creation, the use of artificial intelligence (AI) has become a game-changer. Among the many tools available, successful AI prompts stand out as a...

Prompt injection is an increasingly significant concern in the fields of artificial intelligence (AI) and natural language processing (NLP). As AI systems become more integrated into various...

Midjourney allows you to make unbelievable art using only words! It’s being used by millions, but the results all depend on the prompt. Think of prompts as instructions for a creative assistant. Unclear prompts...

Think of Large Language Models (LLMs) as very smart autocomplete systems that have been trained on vast amounts of text. They’re quite good at understanding human language and can even generate it, so they’re...

Imagine a machine that can write poetry, translate languages instantly, or even come up with engaging marketing copy. That’s what GenAI Prompt Engineering makes possible. It’s...

Imagine being able to write compelling marketing copy, translate languages on the fly, or even generate a creative story out of thin air using artificial intelligence. Thanks to Large Language Models...

Imagine using a super-powered AI writer that creates killer content in seconds. Sounds pretty cool, right? Well, there can be a hidden downside to these large language models (LLMs). While...

Imagine the coolest AI assistant you’ve ever seen in a movie: that’s what Large Language Models (LLMs) are becoming. These powerful tools are revolutionizing software development...

AI is revolutionizing industries, but a covert threat is emerging: AI prompt injection. Malicious actors can exploit AI by manipulating...

You can instruct an AI system much as you would give a friend a recipe to follow. But in this case, instead of following one fixed course, there are many paths the model could take. This is where Tree...